Introduction

I have been looking for a lightweight image tile server recently. Last week I was reviewing tile services built on @Vincent Sarago's own tools, rio-tiler and lambda-proxy, and looked at several derivative projects, including lambda-tiler, landsat-tiler and rio-viz. After some simple tests, I found that the first two both require Lambda to work properly. The third, rio-viz, uses Tornado and does take concurrency into account, but it is a single-machine application, so "porting" it into a proper service would be quite a project. I tried it myself but gave up: on a single node, request blocking is very serious, and none of the application/server combinations I tried improved it much. Moreover, on a single node, re-reading the source data for every request is not economical.

Simple applications weren't going to cut it. On the COG details page I noticed the GeoTrellis project, which is implemented in Scala. Among its projects I found an experimental one that closely matched my needs, cloned it and ran it, but it failed; the application entry point seemed to have changed. I was too lazy to fix it (truthfully, I didn't know how), so I thought I would take a small example from the quick start and run a tile service from that, which should be easy (it wasn't!). For a Scala beginner in China the experience is really painful, even worse than Golang. The build tool SBT pulls dependencies from Maven Central at a turtle-like startup speed. I found a few scattered articles on switching to a domestic mirror, and at one point I felt like throwing up 🤮; only after switching to Huawei Cloud's mirror did it become acceptable. I pushed through the strange syntax of build.sbt until I reached the image I/O part, only to find that the latest API was completely different from the docs. I rewrote the code against the API, but after being tripped up by devilish implicit parameters for more than ten minutes:

```shell
$ rm -rf repos/geotrellis-test ~/.sbt
$ brew rmtree sbt
```

I left.

Terracotta

The GitHub feed is really a great thing; it has recommended many useful projects to me, including Terracotta. The official description is as follows:

Terracotta is a pure Python tile server that runs as a WSGI app on a dedicated webserver or as a serverless app on AWS Lambda. It is built on a modern Python 3.6 stack, powered by awesome open-source software such as Flask, Zappa, and Rasterio.

It supports both traditional and Lambda deployment, it is lightweight and pure Python, which suits my taste, and its tech stack is fairly modern.

Compared with lambda-tiler, which is also built around serverless function computing, Terracotta is simpler both in structure and to understand. The latter's whole workflow is very straightforward, relying on COG's support for partial (range) requests and on GDAL's VFS (Virtual File Systems): no matter where your data lives or how large it is, as long as you give it a local path or an HTTP address, it can generate tiles in real time. In a Lambda environment this approach has few performance problems. For use and deployment in China, however, there are two problems:

- AWS access in China is heavily restricted, which makes using Lambda domestically difficult. Domestic vendors such as Aliyun also offer function computing services, but they are not yet very mature, and the cost of porting components such as the proxy layer is quite high.

- Some open datasets, such as Landsat 8 and Sentinel-2, are hosted on S3 object storage. Tiling with Lambda relies heavily on fast access to other AWS services, so if the service is provided from within China, access speed suffers greatly.
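The "partial request" idea can be seen with nothing but the standard library. Below is a minimal sketch, not GDAL's or lambda-tiler's code: it asks a server for only the first bytes of a file through an HTTP `Range` header (a COG stores its header and IFDs at the start of the file, which is what makes this cheap) and checks the TIFF signature. The helper names and the 16 KiB default are illustrative assumptions.

```python
from urllib.request import Request, urlopen

# Byte order marks plus version number that open classic TIFF (42) and BigTIFF (43) files
TIFF_MAGICS = (b"II*\x00", b"MM\x00*", b"II+\x00", b"MM\x00+")


def header_range_request(url: str, nbytes: int = 16384) -> Request:
    """Build a GET request that asks only for the first `nbytes` bytes of the file."""
    return Request(url, headers={"Range": f"bytes=0-{nbytes - 1}"})


def read_header_bytes(url: str, nbytes: int = 16384) -> bytes:
    """Fetch the leading bytes; a server that honours ranges answers 206 Partial Content.

    Reading at most `nbytes` also caps the transfer if the server ignores the range.
    """
    with urlopen(header_range_request(url, nbytes)) as resp:
        return resp.read(nbytes)


def looks_like_tiff(data: bytes) -> bool:
    """Check the 4-byte TIFF/BigTIFF signature at the start of the data."""
    return data[:4] in TIFF_MAGICS
```

For instance, `looks_like_tiff(read_header_bytes("https://example.com/scene.tif"))` (hypothetical URL) would check a remote file after transferring only its first 16 KiB, provided the server supports range requests.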

Terracotta also recommends deployment on Lambda, and this approach does suit dynamic tiling services well. Compared with lambda-tiler, however, it adds an easy-to-use and reliable "caching mechanism" for header files.

When I used rio-tiler to build a dynamic tiling service that could be deployed quickly on a single machine for a few users and low request rates, I considered caching the header files of each source dataset in memory. Since every tile request has to fetch the header from the source data, this is quite wasteful on a single machine. At the time I thought of either keeping the headers in a dict keyed by the data source address or storing them in an SQLite database. I tried the dict, but the improvement was not noticeable.
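The dict idea amounts to memoizing the header read per source address. A minimal sketch, assuming a hypothetical `read_header` that stands in for the expensive remote read (with rasterio this would be opening the dataset and reading its profile); it is not rio-tiler's API. Note that a plain dict lives inside one process, so separate server worker processes each keep their own copy.

```python
from typing import Callable, Dict

Header = Dict[str, object]


class HeaderCache:
    """Keep one parsed header per source address so repeated tile requests skip the remote read."""

    def __init__(self, loader: Callable[[str], Header]) -> None:
        self._loader = loader
        self._headers: Dict[str, Header] = {}
        self.misses = 0  # how many times the loader actually ran

    def get(self, url: str) -> Header:
        if url not in self._headers:
            self.misses += 1
            self._headers[url] = self._loader(url)
        return self._headers[url]


def read_header(url: str) -> Header:
    # Hypothetical stand-in for fetching and parsing a COG header over HTTP
    return {"url": url, "band_count": 1}


cache = HeaderCache(read_header)
# Three tile requests (z, x, y) against the same hypothetical source file
for _tile in [(10, 843, 388), (10, 844, 388), (10, 843, 389)]:
    header = cache.get("https://example.com/scene_B4.tif")
print(cache.misses)  # 1
```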

Terracotta, by contrast, makes this a mandatory part of its workflow: newly added data must first go through a preprocessing (ingestion) step. This is slower than serving the data directly, but as the saying goes, sharpening the axe does not delay the chopping of wood. And it is still much faster than traditional pre-tiling.
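The difference is where the header work happens. In the sketch below (standard-library `sqlite3`, with a simplified hypothetical schema loosely modelled on the `metadata` table Terracotta uses, not its real schema), `ingest` runs once per dataset and stores header-derived values, and `lookup` is all a tile request needs afterwards. The path and bounds are made-up example values.

```python
import sqlite3
from typing import Optional, Tuple

Bounds = Tuple[float, float, float, float]  # (west, south, east, north)

SCHEMA = (
    "CREATE TABLE IF NOT EXISTS metadata ("
    "name TEXT PRIMARY KEY, filepath TEXT NOT NULL, "
    "bounds_west REAL, bounds_south REAL, bounds_east REAL, bounds_north REAL)"
)


def ingest(conn: sqlite3.Connection, name: str, filepath: str, bounds: Bounds) -> None:
    """Run once per dataset: store header-derived metadata so tile requests never re-read it."""
    conn.execute(SCHEMA)
    conn.execute(
        "INSERT OR REPLACE INTO metadata VALUES (?, ?, ?, ?, ?, ?)",
        (name, filepath, *bounds),
    )
    conn.commit()


def lookup(conn: sqlite3.Connection, name: str) -> Optional[Tuple[str, Bounds]]:
    """Per tile request: a cheap local query instead of a remote header read."""
    row = conn.execute(
        "SELECT filepath, bounds_west, bounds_south, bounds_east, bounds_north "
        "FROM metadata WHERE name = ?",
        (name,),
    ).fetchone()
    if row is None:
        return None
    return row[0], (row[1], row[2], row[3], row[4])


conn = sqlite3.connect(":memory:")
ingest(conn, "B4", "/mnt/data/optimized-rasters/B4.tif", (116.0, 39.5, 117.0, 40.5))
print(lookup(conn, "B4"))
# ('/mnt/data/optimized-rasters/B4.tif', (116.0, 39.5, 117.0, 40.5))
```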

In addition, Terracotta offers good API support for steps such as converting data to COG and ingesting header metadata.

Quick Start

Trying it out is simple. First activate the Python environment you want to use, then run:

```shell
$ pip install -U pip
$ pip install terracotta
```

Check the version:

```shell
$ terracotta --version
terracotta, version 0.5.3.dev20+gd3e3da1
```

Go to the folder containing the TIF files and convert the images to optimized COGs:

```shell
$ terracotta optimize-rasters *.tif -o optimized/
```

Then ingest the images you want to serve into an SQLite database file, using a filename pattern to extract the keys.

I have a complaint about this feature. At first I assumed it accepted general regular expressions, but after a long time I discovered that it only supports simple `{}` placeholders, and it cannot be used without at least one placeholder. That was quite frustrating.

```shell
$ terracotta ingest optimized/LB8_{date}_{band}.tif -o test.sqlite
```
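To make the `{}` pattern syntax concrete, here is a minimal sketch of how such a pattern can be turned into a regular expression with named groups. It is my own illustration of the matching behaviour, not Terracotta's implementation; the helper name `pattern_to_regex`, the rule that a placeholder never spans a `/`, and the sample filename are assumptions.

```python
import re
from typing import List, Pattern, Tuple


def pattern_to_regex(pattern: str) -> Tuple[Pattern[str], List[str]]:
    """Turn e.g. 'LB8_{date}_{band}.tif' into a regex with one named group per placeholder."""
    # With a capturing group, re.split alternates: literal, key, literal, key, ..., literal
    parts = re.split(r"\{(\w+)\}", pattern)
    regex, keys = "", []
    for i, part in enumerate(parts):
        if i % 2 == 0:
            regex += re.escape(part)  # literal text, matched exactly
        else:
            keys.append(part)
            regex += f"(?P<{part}>[^/]+?)"  # placeholder: non-greedy, never crosses a directory
    if not keys:
        raise ValueError("pattern must contain at least one {key} placeholder")
    return re.compile(regex), keys


regex, keys = pattern_to_regex("LB8_{date}_{band}.tif")
match = regex.fullmatch("LB8_20190101_B4.tif")
print(keys, match.groupdict())
# ['date', 'band'] {'date': '20190101', 'band': 'B4'}
```

Using `fullmatch` means files that don't fit the whole pattern (for example a different prefix) are simply skipped rather than partially matched.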

Once ingestion is complete, start the server:

```shell
$ terracotta serve -d test.sqlite
```

By default the server listens on port 5000. Terracotta also provides a web UI, which has to be started separately in another session:

```shell
$ terracotta connect localhost:5000
```

This starts the web UI, which you can then open at the address it prints.
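Once the server is running, tiles are requested by key values and XYZ indices. The sketch below computes the standard Web Mercator (slippy map) tile index for a longitude/latitude and builds a single-band tile URL. The `/singleband/{keys}/{z}/{x}/{y}.png` route follows Terracotta's API documentation as I understand it, so check it against `/swagger.json` for your version; the key values `20190101` and `B4` are hypothetical values for the `LB8_{date}_{band}` pattern.

```python
import math
from typing import Sequence, Tuple
from urllib.parse import quote


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> Tuple[int, int]:
    """Standard Web Mercator (XYZ / slippy map) tile index containing a lon/lat point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y


def singleband_tile_url(base: str, keys: Sequence[str], zoom: int, x: int, y: int) -> str:
    """Build a single-band PNG tile URL; key values go into the path in key order."""
    key_path = "/".join(quote(k, safe="") for k in keys)
    return f"{base}/singleband/{key_path}/{zoom}/{x}/{y}.png"


x, y = lonlat_to_tile(0.0, 0.0, 1)
print(singleband_tile_url("http://localhost:5000", ["20190101", "B4"], 1, x, y))
# http://localhost:5000/singleband/20190101/B4/1/1/1.png
```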

Deployment

I didn't look into the Lambda deployment, since it is roughly similar to lambda-tiler's. Because AWS access is severely limited in China and porting to Aliyun's or Tencent Cloud's serverless platforms is too costly, I skipped this option.

The traditional deployment method is as follows:

I deployed it on a CentOS cloud host, following the docs fairly closely.

First, create a new environment and install Terracotta and its dependencies:

```shell
$ conda create --name gunicorn python
$ source activate gunicorn
$ pip install cython
$ git clone https://github.com/DHI-GRAS/terracotta.git
$ cd terracotta
$ pip install -e .
$ pip install gunicorn
```

Prepare the data. For example, assuming the image files are stored in /mnt/data/rasters/:

```shell
$ terracotta optimize-rasters /mnt/data/rasters/*.tif -o /mnt/data/optimized-rasters
$ terracotta ingest /mnt/data/optimized-rasters/{name}.tif -o /mnt/data/terracotta.sqlite
```

Next, create a systemd service. I ran into two pitfalls here. First, the official example uses Nginx as a reverse proxy to the Unix socket; I tried several approaches without success and didn't want to dig any deeper. The Nginx configuration from the docs:

```nginx
server {
    listen 80;
    server_name VM_IP;

    location / {
        include proxy_params;
        proxy_pass http://unix:/mnt/data/terracotta.sock;
    }
}
```

Second, the application's entry point had changed, so the one in the docs' service file no longer matched the code. After fixing it, the unit file looks like this:

```ini
[Unit]
Description=Gunicorn instance to serve Terracotta
After=network.target

[Service]
User=root
WorkingDirectory=/mnt/data
Environment="PATH=/root/.conda/envs/gunicorn/bin"
Environment="TC_DRIVER_PATH=/mnt/data/terracotta.sqlite"
ExecStart=/root/.conda/envs/gunicorn/bin/gunicorn \
    --workers 3 --bind 0.0.0.0:5000 -m 007 terracotta.server.app:app

[Install]
WantedBy=multi-user.target
```

Note also that Gunicorn binds to `0.0.0.0` here instead of the Unix socket, so the service accepts external connections.

The official explanation is as follows:

- Absolute path to Gunicorn executable

- Number of workers to spawn (2 * cores + 1 is recommended)

- Binding to a unix socket file `terracotta.sock` in the working directory

- Dotted path to the WSGI entry point, which consists of the path to the python module containing the main Flask app and the app object: `terracotta.server.app:app`

In other words, the unit specifies the Gunicorn executable path, the number of workers, the bind address (a socket file in the docs, a TCP address in my version) and the application entry point.
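As an alternative to long `ExecStart` flags, Gunicorn can read its settings from a Python config file. Below is a hypothetical `gunicorn.conf.py` mirroring the flags in the unit above (bind address and umask) and computing the worker count from the recommended 2 * cores + 1 rule instead of hard-coding 3; it is my own sketch, not part of the Terracotta docs.

```python
# gunicorn.conf.py: hypothetical config-file equivalent of the ExecStart flags above
import multiprocessing

bind = "0.0.0.0:5000"                          # --bind
workers = multiprocessing.cpu_count() * 2 + 1  # 2 * cores + 1, as the docs recommend
umask = 0o007                                  # -m 007
```

`ExecStart` would then become `/root/.conda/envs/gunicorn/bin/gunicorn -c /mnt/data/gunicorn.conf.py terracotta.server.app:app` (config path hypothetical), while `TC_DRIVER_PATH` stays in the unit's `Environment=` line.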

Start the service and enable it at boot:

```shell
$ sudo systemctl start terracotta
$ sudo systemctl enable terracotta
$ sudo systemctl restart terracotta
```

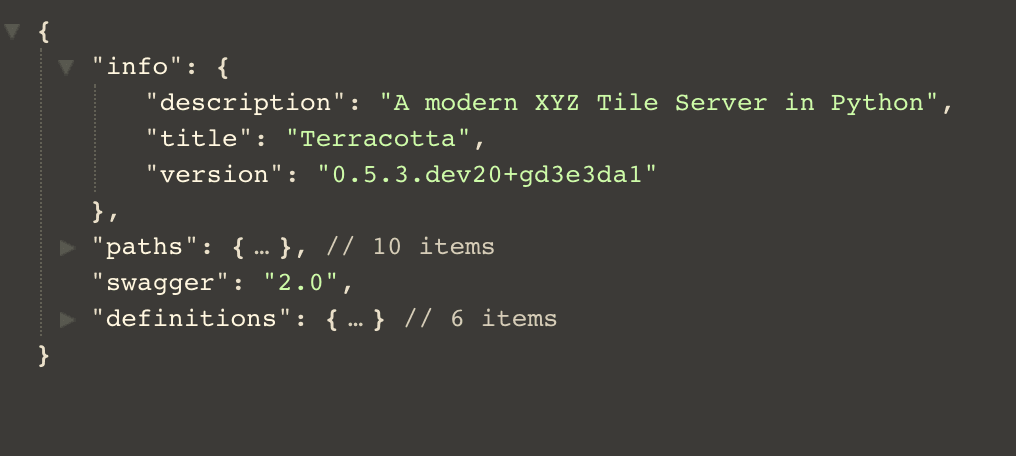

Once it is running, you can fetch the service's API description:

```shell
$ curl localhost:5000/swagger.json
```
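The same description can be inspected from Python, for example to list the available routes. A minimal sketch using only the standard library: `fetch_spec` assumes the server from above at `localhost:5000`, and `list_endpoints` simply walks the `paths` section of the Swagger/OpenAPI document.

```python
import json
from typing import Any, Dict, List
from urllib.request import urlopen

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options"}


def fetch_spec(base_url: str = "http://localhost:5000") -> Dict[str, Any]:
    """Download and parse the server's swagger.json."""
    with urlopen(f"{base_url}/swagger.json") as resp:
        return json.load(resp)


def list_endpoints(spec: Dict[str, Any]) -> List[str]:
    """Return sorted 'METHOD /path' strings from the spec's 'paths' section."""
    return sorted(
        f"{method.upper()} {path}"
        for path, operations in spec.get("paths", {}).items()
        for method in operations
        if method.lower() in HTTP_METHODS  # skip path-level keys such as "parameters"
    )
```

Usage: `for line in list_endpoints(fetch_spec()): print(line)`.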

You can also use Terracotta's built-in client to view the result:

```shell
$ terracotta connect localhost:5000
```

Workflow

As for where to store the header metadata, SQLite is naturally the more convenient option, but MySQL offers more flexibility and stability, and allows data to be ingested remotely into the online database.

Here I ran into a problem: the driver's `create` method failed. I couldn't figure out why, so I created the required tables manually, using the table definitions from the driver's source code.

```python
from typing import Tuple

import pymysql

# The driver's create() failed for me, so the tables are created by hand below:
# import terracotta as tc
# driver = tc.get_driver("mysql://root:password@ip-address:3306/tilesbox")

key_names = ('type', 'date', 'band')
keys_desc = {'type': 'type', 'date': "data's date", 'band': 'raster band'}

_MAX_PRIMARY_KEY_LENGTH = 767 // 4  # Max key length for MySQL is at least 767B; utf8mb4 uses up to 4B per char
_METADATA_COLUMNS: Tuple[Tuple[str, ...], ...] = (
    ('bounds_north', 'REAL'),
    ('bounds_east', 'REAL'),
    ('bounds_south', 'REAL'),
    ('bounds_west', 'REAL'),
    ('convex_hull', 'LONGTEXT'),
    ('valid_percentage', 'REAL'),
    ('min', 'REAL'),
    ('max', 'REAL'),
    ('mean', 'REAL'),
    ('stdev', 'REAL'),
    ('percentiles', 'BLOB'),
    ('metadata', 'LONGTEXT')
)
_CHARSET: str = 'utf8mb4'

# Split the primary-key length budget evenly across the key columns
key_size = _MAX_PRIMARY_KEY_LENGTH // len(key_names)
key_type = f'VARCHAR({key_size})'
key_string = ', '.join(f'{key} {key_type}' for key in key_names)
primary_key = ', '.join(key_names)
column_string = ', '.join(f'{col} {col_type}' for col, col_type in _METADATA_COLUMNS)

# Open the connection explicitly: with PyMySQL >= 1.0, `with pymysql.connect(...)`
# yields the connection rather than a cursor, and leaving the block does not commit.
connection = pymysql.connect(host='ip-address', user='root', password='password', port=3306,
                             binary_prefix=True, charset=_CHARSET, db='tilesbox')
try:
    with connection.cursor() as cursor:
        # Version table (the value should match the Terracotta version in use)
        cursor.execute(f'CREATE TABLE terracotta (version VARCHAR(255)) CHARACTER SET {_CHARSET}')
        cursor.execute('INSERT INTO terracotta VALUES (%s)', ['0.5.2'])

        # Key names and their descriptions
        cursor.execute(f'CREATE TABLE key_names (key_name {key_type}, '
                       f'description VARCHAR(8000)) CHARACTER SET {_CHARSET}')
        key_rows = [(key, keys_desc[key]) for key in key_names]
        cursor.executemany('INSERT INTO key_names VALUES (%s, %s)', key_rows)

        # One row per dataset: key values plus file path
        cursor.execute(f'CREATE TABLE datasets ({key_string}, filepath VARCHAR(8000), '
                       f'PRIMARY KEY({primary_key})) CHARACTER SET {_CHARSET}')

        # One row per dataset: key values plus the precomputed header metadata
        cursor.execute(f'CREATE TABLE metadata ({key_string}, {column_string}, '
                       f'PRIMARY KEY ({primary_key})) CHARACTER SET {_CHARSET}')
    connection.commit()
finally:
    connection.close()
```

Terracotta's header metadata storage requires four tables:

| Table | Description |
|---|---|
| terracotta | Stores Terracotta version information |
| metadata | Stores data header metadata |
| key_names | Key names and descriptions |
| datasets | Data file paths and their key values |

The service unit is then modified as follows:

```ini
[Unit]
Description=Gunicorn instance to serve Terracotta
After=network.target

[Service]
User=root
WorkingDirectory=/mnt/data
Environment="PATH=/root/.conda/envs/gunicorn/bin"
Environment="TC_DRIVER_PATH=root:password@ip-address:3306/tilesbox"
Environment="TC_DRIVER_PROVIDER=mysql"
ExecStart=/root/.conda/envs/gunicorn/bin/gunicorn \
    --workers 3 --bind 0.0.0.0:5000 -m 007 terracotta.server.app:app

[Install]
WantedBy=multi-user.target
```

To ingest local files, you can use something like the following:

```python
import os
import pathlib

import terracotta as tc
from terracotta.scripts import optimize_rasters

driver = tc.get_driver("/path/to/data/google/tc.sqlite")
print(driver.get_datasets())

local = "/path/to/data/google/Origin.tiff"
outdir = "/path/to/data/google/cog"
filename = os.path.splitext(os.path.basename(local))[0]

# raster_files is a list of path lists, as produced by the CLI's glob arguments
seq = [[pathlib.Path(local)]]
path = pathlib.Path(outdir)

# Call the function behind the click command directly
optimize_rasters.optimize_rasters.callback(raster_files=seq, output_folder=path, overwrite=True)

# optimize-rasters writes <name>.tif into the output folder
outfile = os.path.join(outdir, filename + ".tif")
driver.insert(filepath=outfile, keys={'nomask': 'yes'})
print(driver.get_datasets())
```

Running it prints:

```
Optimizing rasters: 0%| | [00:00<?, file=Origin.tiff]
Reading: 0%| | 0/992
Reading: 12%|█▎ | 124/992
Reading: 21%|██▏ | 211/992
Reading: 29%|██▉ | 292/992
Reading: 37%|███▋ | 370/992
Reading: 46%|████▌ | 452/992
Reading: 54%|█████▍ | 534/992
Reading: 62%|██████▏ | 612/992
Reading: 70%|██████▉ | 693/992
Reading: 78%|███████▊ | 771/992
Reading: 87%|████████▋ | 867/992
Creating overviews: 0%| | 0/1
Compressing: 0%| | 0/1
Optimizing rasters: 100%|██████████| [00:06<00:00, file=Origin.tiff]
{('nomask',): '/path/to/data/google/nomask.tif', ('yes',): '/path/to/data/google/cog/Origin.tif'}

Process finished with exit code 0
```

With slight modifications, the script can take the input filename and output folder as arguments, giving a complete optimize-and-ingest workflow.
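Along those lines, a minimal sketch: the input file, output folder, database and key values become command-line arguments, and `run()` reuses exactly the Terracotta calls from the script above (`optimize_rasters.optimize_rasters.callback`, `tc.get_driver`, `driver.insert`). The argument names, the `NAME=VALUE` key syntax and the `tc.sqlite` default are my own assumptions.

```python
import argparse
import pathlib
from typing import Dict, List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Optimize a raster to COG and ingest it into Terracotta")
    parser.add_argument("raster", type=pathlib.Path, help="input raster file")
    parser.add_argument("outdir", type=pathlib.Path, help="folder for the optimized COG")
    parser.add_argument("--db", default="tc.sqlite", help="Terracotta database (hypothetical default)")
    parser.add_argument("--key", action="append", default=[], metavar="NAME=VALUE",
                        help="key value for the dataset; repeat for each key")
    return parser.parse_args(argv)


def parse_keys(items: List[str]) -> Dict[str, str]:
    """Turn ['name=value', ...] into the dict passed as `keys` to driver.insert."""
    return dict(item.split("=", 1) for item in items)


def cog_path(raster: pathlib.Path, outdir: pathlib.Path) -> pathlib.Path:
    """optimize-rasters writes <stem>.tif into the output folder (Origin.tiff -> cog/Origin.tif above)."""
    return outdir / (raster.stem + ".tif")


def run(args: argparse.Namespace) -> None:
    # Imported here so the argument handling above works without Terracotta installed
    import terracotta as tc
    from terracotta.scripts import optimize_rasters

    optimize_rasters.optimize_rasters.callback(
        raster_files=[[args.raster]], output_folder=args.outdir, overwrite=True)
    driver = tc.get_driver(args.db)
    driver.insert(filepath=str(cog_path(args.raster, args.outdir)), keys=parse_keys(args.key))
    print(driver.get_datasets())
```

A script would end with `run(parse_args())` and could then be called as, for example, `python ingest_one.py /path/to/data/google/Origin.tiff /path/to/data/google/cog --db /path/to/data/google/tc.sqlite --key nomask=yes` (script name hypothetical).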